Neural Network Regression Problem with TensorFlow (Part 1)

- Sumit Dey

- Feb 22, 2022


Updated: Feb 23, 2022

What is TensorFlow?

TensorFlow is a free, open-source, end-to-end machine learning library for preprocessing data, modeling data, and serving models. Its core library helps you develop and train ML models, and you can get started quickly by running Colab notebooks directly in your browser.

Why use TensorFlow?

Learning it can jump-start your machine learning and deep learning career. Rather than building machine learning and deep learning models from scratch, you are more likely to use a library such as TensorFlow, because it contains many of the most common machine learning functions you'll want to use.

What is a Regression Problem?

There are many definitions of a regression problem, but here we simplify it to predicting a number. For example, we might want to:

Predict the selling price of a house from given parameters (rooms, house size, number of bathrooms, etc.)

Predict the medical insurance price for an individual using their demographics (age, sex, medical condition, etc.)

We're going to lay the foundation for taking a sample input dataset, building a neural network that discovers patterns in those inputs, and then making a prediction (a number) based on them.

The architecture of a regression neural network (typical)

Why typical? There are almost unlimited ways to write a neural network, but the following is a generic setup for ingesting a collection of numbers, finding patterns in them, and outputting some target number.

| Hyperparameter | Typical value |
| --- | --- |
| Input layer shape | Same shape as the number of features (e.g. 3 for # bedrooms, # bathrooms, # car spaces in housing price prediction) |
| Hidden layer(s) | Problem specific, minimum = 1, maximum = unlimited |
| Neurons per hidden layer | Problem specific, generally 10 to 100 |
| Output layer shape | Same shape as the desired prediction shape (e.g. 1 for house price) |
| Hidden activation | Usually ReLU (rectified linear unit) |
| Output activation | None, ReLU, logistic/tanh |
| Loss function | MSE (mean squared error) or MAE (mean absolute error); Huber (a combination of MAE and MSE) if there are outliers |
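
To make the shapes in this typical setup concrete, here is a minimal NumPy sketch of a forward pass through such a network. It is an illustration only, not TensorFlow code: the layer sizes (3 features, 10 hidden neurons, 1 output), the random untrained weights, and the two example houses are all assumptions chosen to match the examples in the list above.

```python
import numpy as np

rng = np.random.default_rng(42)

n_features = 3   # e.g. bedrooms, bathrooms, car spaces (assumed example)
n_hidden = 10    # neurons in a single hidden layer (assumed)
n_outputs = 1    # one predicted number, e.g. a house price

# Random, untrained weights and zero biases: only the shapes matter here
W1 = rng.normal(size=(n_features, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(size=(n_hidden, n_outputs))
b2 = np.zeros(n_outputs)


def forward(x):
    """Input -> hidden layer with ReLU -> linear output (no output activation)."""
    hidden = np.maximum(0.0, x @ W1 + b1)  # ReLU hidden activation
    return hidden @ W2 + b2


# Two made-up houses, each described by 3 features
houses = np.array([[3.0, 2.0, 1.0],
                   [4.0, 3.0, 2.0]])

predictions = forward(houses)
print(predictions.shape)  # (2, 1): one number per house
```

The predicted values themselves are meaningless because the weights are random; the point is that the input shape follows the number of features and the output shape follows the number of values you want to predict.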

Creating data to view and fit

We can start coding now.

Since we're working on a regression problem (predicting a number) let's create some linear data (a straight line) to model.

import numpy as np

import matplotlib.pyplot as plt

# Create features

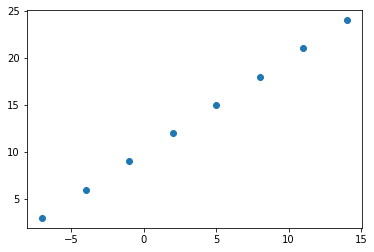

X = np.array([-7.0, -4.0, -1.0, 2.0, 5.0, 8.0, 11.0, 14.0])

# Create labels

y = np.array([3.0, 6.0, 9.0, 12.0, 15.0, 18.0, 21.0, 24.0])

# Visualize it

plt.scatter(X, y);

Our goal is to use X as input and predict output y.

In fact, this is where you will spend much of your time when working with neural networks: making sure your inputs and outputs are in the correct shape.
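
A quick way to check shapes is to print them before building a model. The sketch below uses only NumPy and assumes the usual convention that a dense layer expects inputs shaped as (samples, features), so the 1-D feature array gets a trailing axis added.

```python
import numpy as np

X = np.array([-7.0, -4.0, -1.0, 2.0, 5.0, 8.0, 11.0, 14.0])
y = np.array([3.0, 6.0, 9.0, 12.0, 15.0, 18.0, 21.0, 24.0])

print(X.shape, y.shape)  # (8,) (8,)

# Add a trailing feature axis so each row is one sample with one feature
X_2d = np.expand_dims(X, axis=-1)
print(X_2d.shape)  # (8, 1)

# There must be exactly one label per sample
assert X_2d.shape[0] == y.shape[0]
```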

Modeling steps with TensorFlow

Now that we know the input and output of the data, let's build a neural network to model it.

In TensorFlow, there are typically 3 fundamental steps to creating and training a model.

Creating a model - piece together the layers of a neural network yourself (using the Functional or Sequential API) or import a previously built model (known as transfer learning).

Compiling a model - defining how a model's performance should be measured (loss/metrics) as well as defining how it should improve (optimizer).

Fitting a model - letting the model try to find patterns in the data (how does X get to y).
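
The loss chosen in the compile step is just a number that summarizes how far predictions are from the labels. As a rough illustration, here are plain NumPy versions of the three regression losses mentioned earlier. These are hand-written sketches rather than TensorFlow's implementations, and the `delta=1.0` threshold for Huber and the example predictions are assumptions.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error: average size of the errors."""
    return np.mean(np.abs(y_true - y_pred))


def mse(y_true, y_pred):
    """Mean squared error: penalizes large errors more heavily."""
    return np.mean((y_true - y_pred) ** 2)


def huber(y_true, y_pred, delta=1.0):
    """Huber loss: quadratic for small errors, linear for large ones."""
    error = np.abs(y_true - y_pred)
    quadratic = 0.5 * error ** 2
    linear = delta * (error - 0.5 * delta)
    return np.mean(np.where(error <= delta, quadratic, linear))


y_true = np.array([3.0, 6.0, 9.0])
y_pred = np.array([2.5, 6.0, 12.0])  # made-up predictions with one large miss

print("MAE:", mae(y_true, y_pred))
print("MSE:", mse(y_true, y_pred))
print("Huber:", huber(y_true, y_pred))
```

Notice how the single large miss dominates MSE far more than MAE or Huber, which is why the latter two are often preferred when the data contains outliers.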

We will build a model using the Keras Sequential API for our regression data. Here are the steps:

import tensorflow as tf

# Set random seed

tf.random.set_seed(42)

# Create a model using the Sequential API

model = tf.keras.Sequential([

    tf.keras.layers.Dense(1)

])

# Compile the model

model.compile(loss=tf.keras.losses.mae, # mae is short for mean absolute error

              # SGD is short for stochastic gradient descent

              optimizer=tf.keras.optimizers.SGD(),

              metrics=["mae"])

# Fit the model

# Note: model.fit(X, y, epochs=5) breaks with TensorFlow 2.7.0+ because X is 1-D,

# so we add a feature dimension with tf.expand_dims

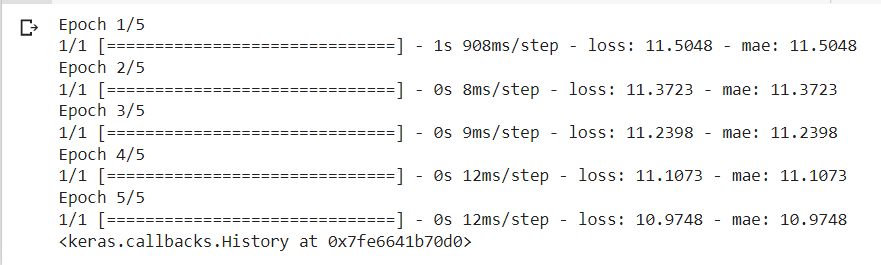

model.fit(tf.expand_dims(X, axis=-1), y, epochs=5)

What do you think the outcome should be if we passed our model an X value of 17.0?

# Make a prediction with the model

# (the input is given shape (1, 1) to match the shape used for training)

model.predict(np.array([[17.0]]))

The model predicts y=12.716..., which is not good: it should be something close to 27.0.
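
The target of 27.0 comes from the straight-line relationship in the data (each label is its feature plus 10). As a sanity check, and not as a neural network, an ordinary least-squares line fit with NumPy recovers that relationship. This is a swapped-in baseline technique, used here only to confirm what a good model should predict.

```python
import numpy as np

X = np.array([-7.0, -4.0, -1.0, 2.0, 5.0, 8.0, 11.0, 14.0])
y = np.array([3.0, 6.0, 9.0, 12.0, 15.0, 18.0, 21.0, 24.0])

# Fit a degree-1 polynomial (a straight line) by least squares
slope, intercept = np.polyfit(X, y, deg=1)

# Value the line gives for an input of 17.0
expected = slope * 17.0 + intercept

print("slope:", round(slope, 3))
print("intercept:", round(intercept, 3))
print("expected y for X=17.0:", round(expected, 3))
```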

How to improve a model

Common ways to improve a deep model:

Add layers

Increase the number of hidden units

Change the activation function

Change the optimization function

Change the learning rate

Fit on more data

Fit for longer

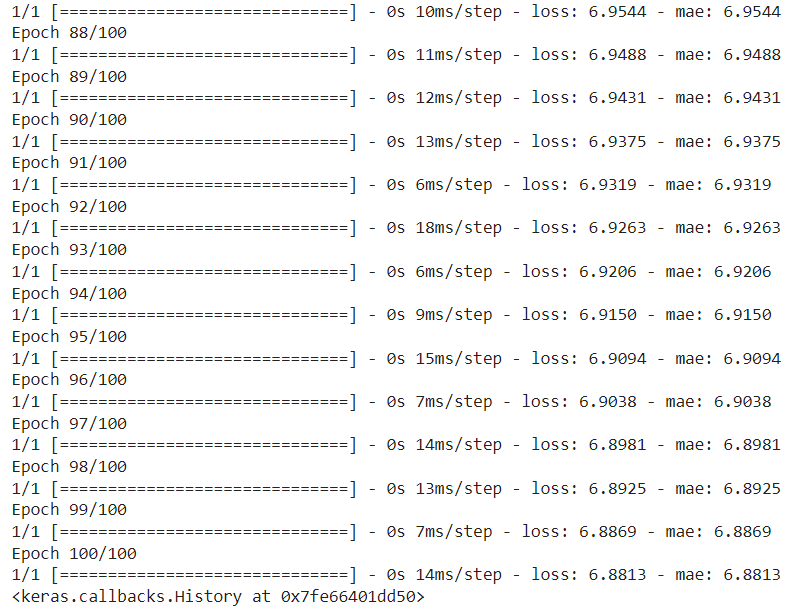

First step: let's keep it simple. All we'll do is train our model for longer (everything else stays the same).

import tensorflow as tf

# Set random seed

tf.random.set_seed(42)

# Create a model using the Sequential API

model = tf.keras.Sequential([

    tf.keras.layers.Dense(1)

])

# Compile the model

model.compile(loss=tf.keras.losses.mae, # mae is short for mean absolute error

              # SGD is short for stochastic gradient descent

              optimizer=tf.keras.optimizers.SGD(),

              metrics=["mae"])

# Fit the model

# Note: model.fit(X, y, epochs=100) breaks with TensorFlow 2.7.0+ because X is 1-D,

# so we add a feature dimension with tf.expand_dims

model.fit(tf.expand_dims(X, axis=-1), y, epochs=100) # train for 100 epochs

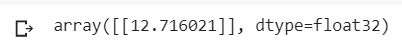

How about we try to predict 17.0 again?

# Try and predict what y would be if X was 17.0

# (the input is given shape (1, 1) to match the shape used for training)

model.predict(np.array([[17.0]])) # the right answer is 27.0 (y = X + 10)

Now y=30.158..., which is much better: it is a lot closer to 27.
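
To see why training for longer helps, it can be useful to mimic what a single one-unit dense layer does with plain NumPy. The sketch below is a hand-rolled approximation, not what Keras runs internally: it assumes the weight and bias both start at zero (Keras initializes the weight randomly), uses full-batch gradient descent on MAE, and assumes a learning rate of 0.01. The exact numbers will therefore differ from the TensorFlow output above.

```python
import numpy as np

X = np.array([-7.0, -4.0, -1.0, 2.0, 5.0, 8.0, 11.0, 14.0])
y = np.array([3.0, 6.0, 9.0, 12.0, 15.0, 18.0, 21.0, 24.0])


def train(epochs, learning_rate=0.01):
    """Fit y ~ w * X + b by gradient descent on mean absolute error."""
    w, b = 0.0, 0.0  # assumed starting point (Keras would start w randomly)
    for _ in range(epochs):
        error = (w * X + b) - y
        # The gradient of |error| is sign(error); average over all samples
        grad_w = np.mean(np.sign(error) * X)
        grad_b = np.mean(np.sign(error))
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mae(w, b):
    """Mean absolute error of the line w * X + b on the training data."""
    return np.mean(np.abs((w * X + b) - y))


for epochs in (5, 100):
    w, b = train(epochs)
    print(epochs, "epochs -> MAE:", round(mae(w, b), 3),
          "| prediction for 17.0:", round(w * 17.0 + b, 3))
```

With only 5 updates the weight has barely moved from its starting value, while 100 updates bring the line much closer to the data, which mirrors the improvement seen with the TensorFlow model.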

In my next post, we will work on a bigger dataset and look at more ways to improve a deep model.

Continue to Part 2.
