Neural Network Classification Problem with TensorFlow (Predict Image)

- Sumit Dey

- Feb 25, 2022


Now we are moving from regression problems to classification problems. Generally, a classification problem involves predicting whether something is one thing or another.

There are a few common types of classification problem:

- Predict whether or not someone has cancer based on their health parameters. This is called binary classification, since there are only two options.
- Decide whether a photo is of food, a person, or a cat. This is called multi-class classification, since there are more than two options.
- Predict which categories should be assigned to a blog article. This is called multi-label classification, since a single article could have more than one category assigned.
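To make the three problem types concrete, here is a small pure-Python sketch (no TensorFlow) of how a model's raw output scores are commonly turned into predictions in each case: one sigmoid output with a threshold for binary, softmax plus argmax for multi-class, and an independent sigmoid per class for multi-label. The function names, the 0.5 threshold, and the example scores are illustrative assumptions, not part of the original walkthrough.

```python
import math


def sigmoid(z):
    """Squash a raw score (logit) into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_binary(logit, threshold=0.5):
    """Binary: one output; predict 1 if its probability reaches the threshold."""
    return int(sigmoid(logit) >= threshold)


def predict_multiclass(logits):
    """Multi-class: exactly one class, the index with the highest probability."""
    probs = softmax(logits)
    return max(range(len(probs)), key=lambda i: probs[i])


def predict_multilabel(logits, threshold=0.5):
    """Multi-label: every class is an independent yes/no decision."""
    return [i for i, z in enumerate(logits) if sigmoid(z) >= threshold]


# Hypothetical raw model outputs, for illustration only
print(predict_binary(2.0))                   # 1 (e.g. "has cancer")
print(predict_multiclass([0.5, 2.0, -1.0]))  # 1 (e.g. "person")
print(predict_multilabel([1.5, -2.0, 0.3]))  # [0, 2] (two categories apply)
```

The key difference is that multi-class forces the probabilities to compete (they sum to 1), while multi-label scores each class on its own.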

The architecture of a classification neural network (typical)

Why typical? There are many ways to write a neural network, depending on the type of problem we are working on. However, there are some fundamentals that all deep neural networks contain:

- An input layer.
- Some hidden layers.
- An output layer.
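As a rough illustration of how data flows through these three kinds of layer, here is a plain-Python forward pass for one example. It is a minimal sketch with made-up weights and a tiny 3-feature input, not how TensorFlow implements its layers; it assumes a ReLU hidden layer and a softmax output layer, the usual choices for a multiclass classifier.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation applied to (weights . inputs + bias) per neuron."""
    pre_activations = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        pre_activations.append(z)
    return activation(pre_activations)


def relu(values):
    """Hidden-layer activation: negative values become 0."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Output-layer activation: turn scores into probabilities that sum to 1."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Input layer: one example with 3 features (hypothetical values)
x = [1.0, -2.0, 0.5]

# Hidden layer: 2 neurons with ReLU (made-up weights)
hidden = dense(x, weights=[[0.2, -0.1, 0.4], [-0.3, 0.2, 0.1]],
               biases=[0.0, 0.1], activation=relu)

# Output layer: 3 neurons, one per class, with softmax
probs = dense(hidden, weights=[[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]],
              biases=[0.0, 0.0, 0.0], activation=softmax)

print(hidden)
print(probs, sum(probs))
```

Here the second hidden neuron's weighted sum is negative, so ReLU zeroes it out, and the output probabilities always sum to 1.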

The following are some standard values we'll often use in classification neural networks:

| Hyperparameter | Binary classification | Multiclass classification |
|---|---|---|
| Input layer shape | Number of features | Same as binary classification |
| Hidden layer(s) | Problem specific, minimum = 1, maximum = unlimited | Same as binary classification |
| Neurons per hidden layer | Problem specific, generally 10 to 100 | Same as binary classification |
| Output layer shape | 1 (one class or the other) | 1 per class |
| Hidden activation | Usually ReLU (rectified linear unit) | Same as binary classification |
| Loss function | Cross entropy (tf.keras.losses.BinaryCrossentropy in TensorFlow) | Cross entropy (tf.keras.losses.CategoricalCrossentropy in TensorFlow) |
| Optimizer | SGD (stochastic gradient descent), Adam | Same as binary classification |
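Both columns use cross entropy as the loss. To show what that loss actually measures, here is a sketch of the standard binary and categorical cross-entropy formulas for a single example, written in plain Python. TensorFlow's own implementations add options (for example, computing from logits) that are not shown; the small clipping value is an assumption to avoid taking log(0).

```python
import math


def binary_crossentropy(y_true, p):
    """Loss for one example: y_true is 0 or 1, p is the predicted P(class 1)."""
    eps = 1e-7  # clip p away from 0 and 1 so log() is always defined
    p = min(max(p, eps), 1 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))


def categorical_crossentropy(one_hot, probs):
    """Loss for one example: one_hot marks the true class, probs sum to 1."""
    eps = 1e-7
    return -sum(t * math.log(max(q, eps)) for t, q in zip(one_hot, probs))


# A confident, correct prediction gives a small loss...
print(binary_crossentropy(1, 0.9))
# ...while a confident, wrong prediction is penalised heavily
print(binary_crossentropy(1, 0.1))

# Multiclass: only the probability assigned to the true class matters
print(categorical_crossentropy([0, 1, 0], [0.2, 0.7, 0.1]))  # same as -log(0.7)
```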

Multiclass classification with a larger example

In this section, we'll experiment with multiclass classification by building a neural network to predict whether a piece of clothing is a shoe, a shirt, a jacket, or something else.

To start, we'll need some data. Fortunately, TensorFlow has a multiclass classification dataset called Fashion MNIST built in, so we can get started straight away. We can import it using the tf.keras.datasets module.

Resource: The following multiclass classification problem has been adapted from the

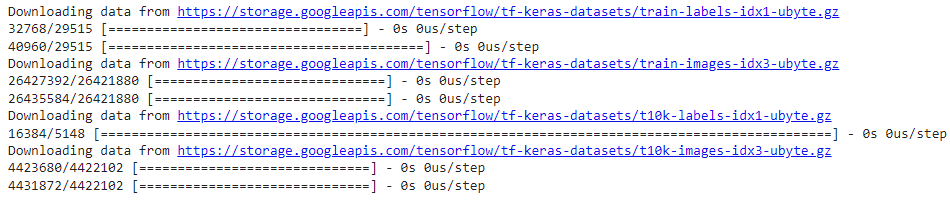

Load the data (train and test) from the Fashion MNIST dataset

```python
import tensorflow as tf
from tensorflow.keras.datasets import fashion_mnist

# The data has already been sorted into training and test sets for us
(train_data, train_labels), (test_data, test_labels) = fashion_mnist.load_data()
```

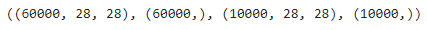

In deep learning, it's important to check the shape of our data.

```python
# Check the shape of our data
train_data.shape, train_labels.shape, test_data.shape, test_labels.shape
```

There are 60,000 training examples, each with shape (28, 28) and a label, as well as 10,000 test examples of shape (28, 28). Let's visualize a single example.

```python
# Plot a single example
import matplotlib.pyplot as plt

plt.imshow(train_data[7]);
```

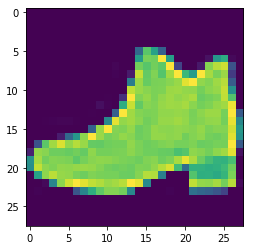

Now let's check that sample's label.

```python
# Check our sample's label
train_labels[7]
```

The labels are numbers, which is fine for a neural network, but we need to convert them into a human-readable format.

Let's create a small list of the class names (we can find them on the dataset's GitHub page).

```python
class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

# Class names, and how many classes there are (this will be our output shape)
class_names, len(class_names)
```

Let's plot another example with a human-readable class name

```python
# Plot an example image and its label
plt.imshow(train_data[17], cmap=plt.cm.binary)  # change the colours to black and white
plt.title(class_names[train_labels[17]]);
```

Wow! It's a T-shirt/top. Let's look at a few random images from Fashion MNIST.

```python
# Plot multiple random images of Fashion MNIST
import random

plt.figure(figsize=(7, 7))
for i in range(8):
    ax = plt.subplot(4, 4, i + 1)
    rand_index = random.choice(range(len(train_data)))
    plt.imshow(train_data[rand_index], cmap=plt.cm.binary)
    plt.title(class_names[train_labels[rand_index]])
    plt.axis(False)
```

Create Model

Now it's time to build a model to figure out the relationship between the pixel values and their labels. Here are the input and output shapes:

- The input shape is a 28x28 tensor (the height and width of the image).
- The output layer predicts across 10 different classes, so its shape is 10.
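The Flatten layer used as the model's input layer turns each 28x28 image into a single vector of 784 values, so that the Dense layers can process it. Here is a plain-Python sketch of the same reshaping, assuming row-major order (row by row); in the model itself this is done by tf.keras.layers.Flatten, and the `flatten` helper here is purely illustrative.

```python
def flatten(image):
    """Turn a 2-D image (a list of rows) into one long row of pixels."""
    return [pixel for row in image for pixel in row]


# A hypothetical 28x28 image of zeros with one bright pixel at row 1, column 2
image = [[0] * 28 for _ in range(28)]
image[1][2] = 255

flat = flatten(image)
print(len(flat))        # 784 = 28 * 28
print(flat.index(255))  # 30 = row 1 * 28 + column 2
```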

After experimenting with a number of models, we settled on the following one, which uses a close-to-ideal learning rate and performed reasonably well.

```python
# Set random seed
tf.random.set_seed(42)

# Create the model
model = tf.keras.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),  # input layer: reshape 28x28 to 784
    tf.keras.layers.Dense(4, activation="relu"),
    tf.keras.layers.Dense(4, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax")  # output shape is 10
])

# Compile the model
model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(),
              optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),  # learning rate found by experimenting
              metrics=["accuracy"])
```
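To get a feel for how small this model is, we can count its trainable parameters by hand: each Dense layer has one weight per input per unit, plus one bias per unit, and Flatten has none. This is plain arithmetic, not a TensorFlow call; the totals reported by model.summary() should agree with it.

```python
def dense_params(n_inputs, n_units):
    """Weights (inputs x units) plus one bias per unit."""
    return n_inputs * n_units + n_units


# Layer sizes from the model: Flatten -> Dense(4) -> Dense(4) -> Dense(10)
flattened = 28 * 28                   # Flatten only reshapes; no parameters
layer_1 = dense_params(flattened, 4)  # 784 * 4 + 4
layer_2 = dense_params(4, 4)          # 4 * 4 + 4
layer_3 = dense_params(4, 10)         # 4 * 10 + 10
total = layer_1 + layer_2 + layer_3
print(layer_1, layer_2, layer_3, total)  # 3140 20 50 3210
```

Almost all of the parameters sit in the first Dense layer, because it connects to every one of the 784 pixels.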

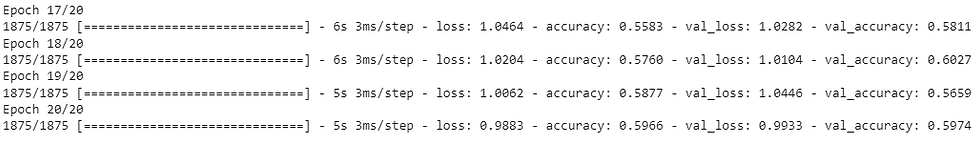

```python
# Fit the model
history = model.fit(train_data,
                    train_labels,
                    epochs=20,
                    validation_data=(test_data, test_labels))
```
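The model is compiled with SparseCategoricalCrossentropy because the Fashion MNIST labels are plain integers from 0 to 9. CategoricalCrossentropy computes the same loss but expects one-hot encoded labels instead. This plain-Python sketch shows the two give the same value for one example; the probabilities are made up, the helper names are hypothetical, and this is not TensorFlow's actual implementation.

```python
import math


def sparse_categorical_crossentropy(label, probs):
    """Integer label: the loss is -log of the probability given to that class."""
    return -math.log(probs[label])


def categorical_crossentropy(one_hot, probs):
    """One-hot label: sum -t * log(q) over classes (zero terms are skipped)."""
    return -sum(t * math.log(q) for t, q in zip(one_hot, probs) if t > 0)


def to_one_hot(label, num_classes):
    """Convert an integer label into a one-hot list."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


# Hypothetical softmax output for the 10 Fashion MNIST classes
probs = [0.01, 0.01, 0.02, 0.01, 0.05, 0.1, 0.02, 0.2, 0.08, 0.5]
label = 9  # index of 'Ankle boot' in class_names

sparse_loss = sparse_categorical_crossentropy(label, probs)
one_hot_loss = categorical_crossentropy(to_one_hot(label, 10), probs)
print(sparse_loss, one_hot_loss)  # both equal -log(0.5)
```

Using the sparse version simply saves us from one-hot encoding 60,000 training labels.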

Now let's evaluate the model.

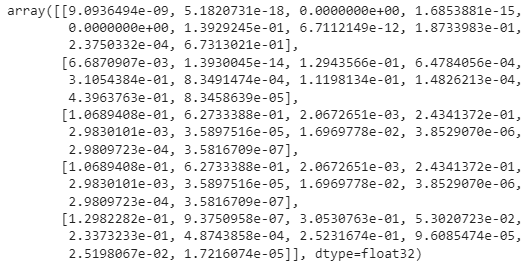

```python
# Make predictions with the most recent model
y_probs = model.predict(test_data)  # "probs" is short for probabilities

# View the first 5 predictions
y_probs[:5]
```

These predictions aren't human-readable yet: each one is an array of 10 probabilities, one per class. Let's turn them into something human-readable.
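The basic step is to take the index of the highest probability (the argmax) and look up its class name. In the notebook, y_probs.argmax(axis=1) returns the predicted class index for every test image at once; the pure-Python sketch below spells out the same logic for a single row. The probability values are made up, and `to_class_name` is a hypothetical helper.

```python
class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']


def to_class_name(probs, classes):
    """Return the name and probability of the most likely class."""
    best_index = max(range(len(probs)), key=lambda i: probs[i])
    return classes[best_index], probs[best_index]


# A made-up prediction row, shaped like one row of y_probs (10 probabilities)
example_row = [0.0, 0.0, 0.1, 0.0, 0.1, 0.0, 0.05, 0.0, 0.7, 0.05]
name, confidence = to_class_name(example_row, class_names)
print(name, round(confidence * 100))  # Bag 70
```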

Let's create a function that makes a prediction on an input image and plots the result.

```python
# Create a function that takes a set of images and an index,
# and plots the prediction for the image at that index
def plot_random_image(model, image, index, true_labels, classes):
    """Plots an image and labels it with a predicted and a truth label.

    Args:
        model: a trained model (trained on data similar to what's in images).
        image: an array of images.
        index: index of the target image inside the array.
        true_labels: array of ground truth labels for the images.
        classes: array of class names for the images.

    Returns:
        A plot of the image with a predicted class label from `model`
        as well as the truth class label from `true_labels`.
    """
    # Create predictions and targets
    target_image = image[index]
    # Reshape to (1, 28, 28): the model expects a batch of images
    pred_probs = model.predict(target_image.reshape(1, 28, 28))
    pred_label = classes[pred_probs.argmax()]
    true_label = classes[true_labels[index]]

    # Plot the target image
    plt.imshow(target_image, cmap=plt.cm.binary)

    # Change the color of the label depending on whether the prediction is right or wrong
    if pred_label == true_label:
        color = "green"
    else:
        color = "red"

    # Add xlabel information (prediction/true label)
    plt.xlabel("Prediction: {} {:2.0f}% (Actual Label: {})".format(pred_label,
                                                                   100 * pred_probs.max(),
                                                                   true_label),
               color=color)  # set the color to green or red
```

Our function is ready to use. Now we can pick any image by its index, pass it to the function, and get a prediction for that particular image.

Let's try the image at index 18 of the test data.

```python
# Plot an example image and its label from the test data
plt.imshow(test_data[18], cmap=plt.cm.binary)  # change the colours to black and white
plt.title(class_names[test_labels[18]]);
```

So, the image at index 18 of the test data is a Bag. Let's pass this image to our function.

```python
# Check out the image as well as its prediction
plot_random_image(model=model,
                  image=test_data,
                  index=18,
                  true_labels=test_labels,
                  classes=class_names)
```

Wow! The model predicts "Bag" with about 88% confidence, and the prediction is correct. Now let's try a negative scenario with the image at index 17 of the test data.
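Note that the percentage in the plot is the model's confidence in one prediction (its largest softmax probability), not its accuracy. Accuracy is measured over many examples: the fraction of predictions that match the true labels, which is what the "accuracy" metric reports during training. A minimal sketch with made-up class indices:

```python
def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return correct / len(actual)


# Hypothetical predicted and true class indices for five test images
predicted = [8, 4, 2, 7, 2]
actual = [8, 4, 2, 7, 4]

print(accuracy(predicted, actual))  # 0.8, i.e. 4 out of 5 correct
```

A model can be very confident on a single image and still have modest accuracy overall, or the other way around.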

```python
# Plot an example image and its label from the test data
plt.imshow(test_data[17], cmap=plt.cm.binary)  # change the colours to black and white
plt.title(class_names[test_labels[17]]);
```

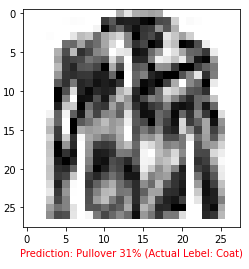

The actual label of this image is Coat. Let's pass this image to our function.

```python
# Check out the image as well as its prediction
plot_random_image(model=model,
                  image=test_data,
                  index=17,
                  true_labels=test_labels,
                  classes=class_names)
```

This time the model came up with a wrong prediction (Pullover); the actual label is Coat.

Did you figure out which predictions the model gets confused on?

It seems to mix up Coat and Pullover, or Sneaker and Ankle boot. The overall shapes of a coat and a pullover, or of a sneaker and an ankle boot, are similar. Overall shape is likely one of the patterns the model has learned, so when two images have a similar shape, their predictions can get mixed up. This kind of confusion between visually similar classes is very common in deep learning models.
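One way to find out which classes the model confuses is to count its mistakes by (true label, predicted label) pair, which amounts to reading the off-diagonal cells of a confusion matrix. This is a plain-Python sketch assuming you already have predicted class indices (for example from y_probs.argmax(axis=1)) and the true labels (test_labels); the short lists below are made up for illustration and are not real results from this model.

```python
from collections import Counter

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']


def most_confused(predicted, actual, classes, top=3):
    """Count (true, predicted) name pairs for wrong predictions, most frequent first."""
    mistakes = Counter(
        (classes[a], classes[p]) for p, a in zip(predicted, actual) if p != a
    )
    return mistakes.most_common(top)


# Hypothetical class indices, chosen to mirror the mix-ups described above
actual = [4, 4, 4, 7, 7, 9, 2, 8]
predicted = [2, 2, 4, 9, 7, 7, 4, 8]

for (true_name, pred_name), count in most_confused(predicted, actual, class_names):
    print(f"{true_name} predicted as {pred_name}: {count}")
```

With real predictions from the whole test set, the pairs at the top of this list show where the model struggles most.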
